The AI Readiness Moment for CDOs

Across industries, Chief Data Officers are under pressure like never before. GenAI pilots are accelerating, regulatory scrutiny is tightening, and business leaders expect insights at the speed of decision-making. Yet beneath these ambitions lies a sobering truth: AI is only as good as the data it consumes. When data is incomplete, inconsistent, or delayed, even the most sophisticated models can spiral into biased outputs, operational errors, or regulatory violations.

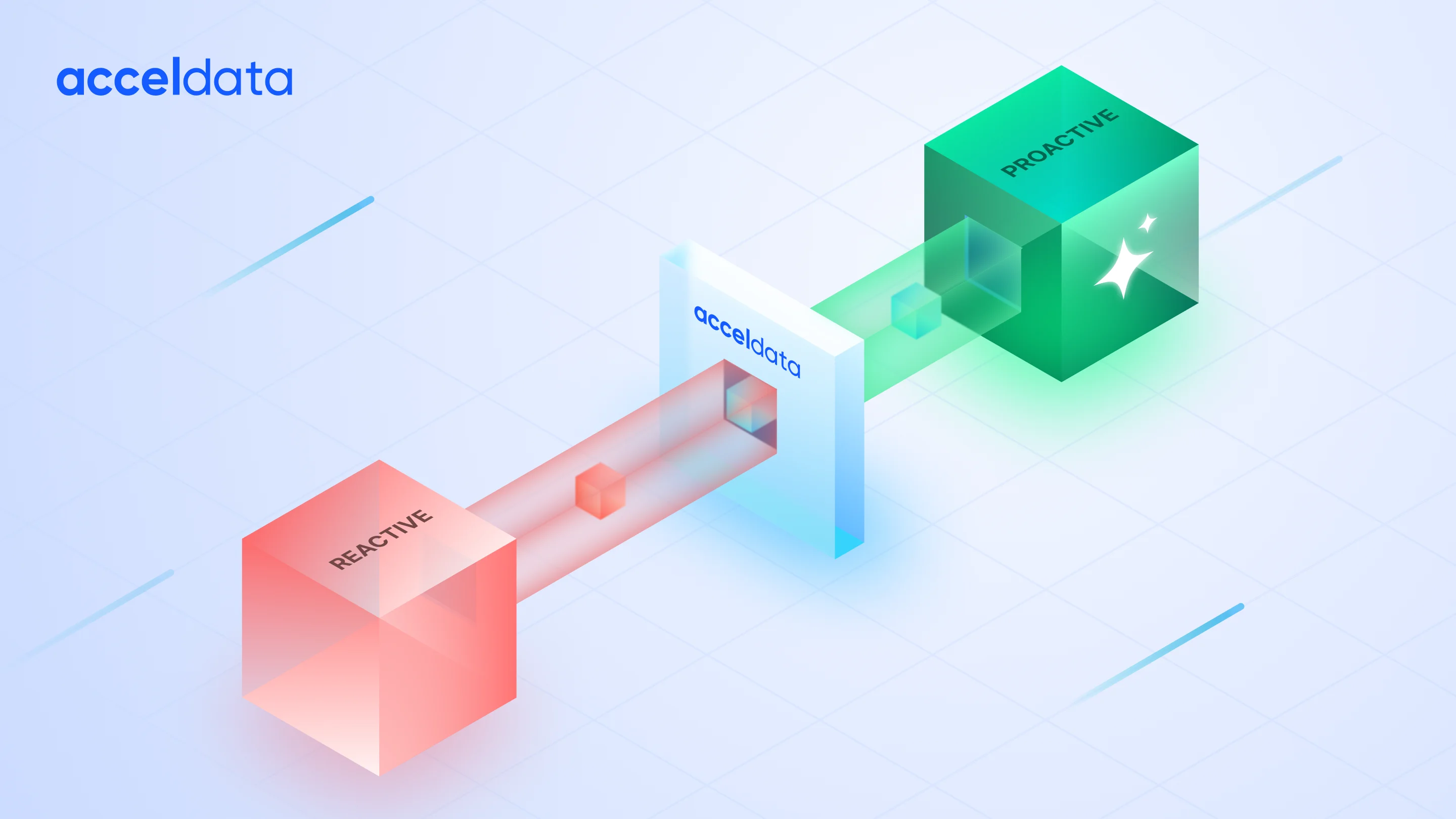

Traditional data quality practices — often reactive and siloed — are no longer enough. Fixing issues after they appear in dashboards or models is akin to discovering a plane’s engine trouble mid-flight. The future of enterprise data strategy hinges on proactive observability — a continuous, anticipatory capability that prevents data problems before they disrupt operations.

73% of data leaders cite data trust as the #1 barrier to scaling AI initiatives. (Source: MIT Sloan, 2025)

The New Complexity of AI-Powered Data Ecosystems

Enterprises once managed relatively contained data ecosystems: curated warehouses, predictable pipelines, and BI dashboards. The rise of AI, and especially GenAI, has shattered this simplicity.

- Data Volume and Velocity: AI models require exponentially larger datasets, refreshed frequently to stay relevant.

- Hybrid and Multi-Cloud Architectures: Data flows across cloud, on-premises, and SaaS silos, creating blind spots in lineage and trust.

- Dynamic Pipelines: Continuous integration/continuous delivery (CI/CD) of data products and AI models introduces constant schema shifts and metadata changes.

- Regulatory Mandates: From Europe’s AI Act to U.S. state-level data privacy laws, enterprises must now prove not just data accuracy, but explainability and compliance.

This complexity multiplies risk. A single undetected anomaly can cascade across dozens of downstream systems — corrupting AI model outputs, eroding stakeholder trust, and triggering compliance breaches.
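One of the risks named above, an undetected schema shift, can be illustrated with a small Python sketch. It assumes schemas are captured as plain column-to-type dictionaries; the `diff_schema` function and the example column names are hypothetical, and a real platform would read schemas from a catalog or warehouse rather than from hard-coded values.

```python
def diff_schema(previous, current):
    """Compare two {column: type} snapshots and report what changed."""
    added = {c: t for c, t in current.items() if c not in previous}
    removed = {c: t for c, t in previous.items() if c not in current}
    retyped = {
        c: (previous[c], current[c])
        for c in previous.keys() & current.keys()
        if previous[c] != current[c]
    }
    return {"added": added, "removed": removed, "retyped": retyped}


# Illustrative snapshots taken before and after a pipeline release.
yesterday = {"customer_id": "int", "region": "string", "spend": "float"}
today = {"customer_id": "string", "region": "string", "segment": "string"}

drift = diff_schema(yesterday, today)
has_drift = any(drift.values())
```

Running a comparison like this on every release is one way to catch a changed column before it reaches a model or dashboard.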

“Observability is no longer optional hygiene — it’s the backbone of AI governance and innovation.”

Why Reactive Data Practices Are Failing

Most organizations still rely on reactive methods: waiting for end users to flag a bad report, or for downstream KPIs to reveal data drift. These approaches are slow, costly, and increasingly untenable in AI contexts:

- Latency: Issues discovered downstream delay model retraining and insight delivery.

- Cost Escalation: Fixing data problems after deployment consumes 3–10x more resources than addressing them upstream.

- Eroded Trust: Executives lose faith in AI initiatives when “black box” errors emerge in production.

- Compliance Gaps: Regulations increasingly require proactive monitoring and auditable controls — not post-hoc fixes.

In short: reactive approaches cannot keep pace with AI-scale data ecosystems.

Proactive Data Observability: A Strategic Shift

Proactive data observability is not just a technology capability; it is a leadership mandate. It combines continuous monitoring, automated anomaly detection, and deep context into a single strategic layer spanning the enterprise data fabric.

Key principles include:

- Continuous Monitoring Across the Data Lifecycle: Observability must extend beyond ingestion into every stage, from source systems and transformation layers to AI model training and inference pipelines.

- Context-Rich Insights, Not Just Alerts: Modern observability systems connect anomalies to lineage, ownership, and downstream impact, enabling faster root-cause analysis and prioritization.

- Embedded in Data Contracts and Governance: Observability must be integral to data contracts, SLAs, and governance frameworks, ensuring reliability is baked into every data product by design.

- AI-Native Adaptation: As AI pipelines evolve dynamically, observability must adapt in real time, learning patterns and anticipating drift or bias before models degrade.
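To make the idea of context-rich alerts more concrete, here is a minimal Python sketch rather than a reference implementation. It assumes a simple z-score test on daily row counts; the `Dataset` record, the `volume_alert` function, the dataset and team names, and the threshold of 3.0 are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from statistics import mean, stdev


@dataclass
class Dataset:
    """Hypothetical catalog entry: who owns the data and what depends on it."""
    name: str
    owner: str
    downstream: list = field(default_factory=list)


def volume_alert(dataset, history, latest, threshold=3.0):
    """Return an alert dict when `latest` is a z-score outlier, else None."""
    if len(history) < 2:
        return None  # not enough history to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Flat history: any change at all counts as a deviation.
        z = 0.0 if latest == mu else float("inf")
    else:
        z = abs(latest - mu) / sigma
    if z <= threshold:
        return None
    # Attach ownership and downstream impact so the alert is actionable.
    return {
        "dataset": dataset.name,
        "owner": dataset.owner,
        "impacted": list(dataset.downstream),
        "expected_rows": mu,
        "observed_rows": latest,
        "z_score": z,
    }


orders = Dataset("orders_daily", "sales-data-team", ["churn_model", "revenue_dashboard"])
history = [1000, 1020, 980, 1010, 990]
alert = volume_alert(orders, history, latest=400)    # sharp drop in volume
quiet = volume_alert(orders, history, latest=1005)   # within normal range
```

The design point is that the alert carries the owner and the impacted downstream assets alongside the statistic, so whoever receives it knows whom to contact and what is at risk.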

Boardroom Checkpoint for CDOs:

“Can we trace, trust, and troubleshoot our AI pipelines before the board asks why a model failed?”

The CDO’s Leadership Imperative

For CDOs, the shift to proactive observability is more than operational hygiene — it is foundational to enterprise transformation. Three strategic imperatives emerge:

1. Champion Data Trust as a Business Enabler

AI initiatives live or die on executive trust. By embedding observability into the data strategy, CDOs can confidently assure boards and regulators that AI outputs are grounded in reliable, explainable data.

2. Embed Observability into AI and Data Product Lifecycles

Proactive monitoring must become part of every sprint, every pipeline, every release cycle — not an afterthought. This requires cultural change: data engineers, analysts, and AI teams must see observability as everyone’s responsibility.

3. Align Observability with Regulatory and Ethical Mandates

CDOs must stay ahead of evolving AI governance standards. Proactive observability provides the auditable trail regulators demand and the ethical assurances customers expect.

Observability as the Foundation for Innovation

The most advanced AI-ready enterprises treat observability as a platform capability — not a bolt-on. When observability is continuous and proactive, organizations gain three compounding advantages:

- Speed to Insight: Pipelines remain reliable, reducing downtime and accelerating time-to-market for AI products.

- Risk Mitigation: Anomalies are detected and addressed upstream, preventing costly rework and reputational harm.

- Innovation at Scale: Trusted data unlocks confidence to experiment, whether launching personalized retail experiences, optimizing supply chains, or advancing clinical research.

In essence, observability fuels both control and creativity — the dual mandate of modern CDOs.

Practical Steps to Embed Proactive Observability

How can CDOs operationalize this vision? Consider these starting moves:

- Audit Your Current State: Map your data landscape to identify blind spots in pipeline monitoring, lineage, and quality checks.

- Prioritize High-Impact Pipelines: Focus first on AI training data, mission-critical metrics, and regulatory-reporting flows.

- Integrate with Governance: Embed observability into data contracts, catalog metadata, and compliance workflows.

- Automate and Scale: Leverage anomaly detection, lineage tracing, and self-healing where possible to reduce human toil.

- Create Executive Dashboards: Provide real-time health indicators to leadership, reinforcing the value of observability investments.
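As one illustration of embedding observability into a data contract, the sketch below checks a batch of records against a contract that declares required columns and a freshness limit. Everything here is an assumption for demonstration: the `CONTRACT` structure, the `check_contract` function, the column names, and the 24-hour staleness limit are hypothetical and do not represent any particular product's contract format.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical contract for one data product; columns and limits are illustrative.
CONTRACT = {
    "required_columns": {"customer_id": int, "region": str},
    "max_staleness": timedelta(hours=24),
}


def check_contract(rows, last_loaded_at, contract, now=None):
    """Return a list of human-readable violations (empty list means healthy)."""
    now = now or datetime.now(timezone.utc)
    violations = []
    age = now - last_loaded_at
    if age > contract["max_staleness"]:
        violations.append(f"stale: last load was {age} ago")
    for i, row in enumerate(rows):
        for column, expected in contract["required_columns"].items():
            value = row.get(column)
            if value is None:
                violations.append(f"row {i}: missing {column}")
            elif not isinstance(value, expected):
                violations.append(
                    f"row {i}: {column} is {type(value).__name__}, "
                    f"expected {expected.__name__}"
                )
    return violations


now = datetime(2025, 1, 2, 12, 0, tzinfo=timezone.utc)
good_rows = [{"customer_id": 1, "region": "EMEA"}]
bad_rows = [{"customer_id": "1", "region": None}]

healthy = check_contract(good_rows, now - timedelta(hours=2), CONTRACT, now=now)
broken = check_contract(bad_rows, now - timedelta(hours=30), CONTRACT, now=now)
```

A count of violations per data product, produced by a check along these lines, is the kind of signal that could feed an executive health dashboard.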

“The organizations that thrive in the GenAI era won’t simply build better models — they will trust their data to fuel them.”

A Vision for the AI-Ready Enterprise

Proactive observability is more than an operational fix; it is a strategic differentiator. The enterprises that thrive in the GenAI era will not simply manage data pipelines — they will orchestrate trusted, explainable, and adaptive data ecosystems.

For CDOs, this is the moment to lead: to redefine data strategy around trust, agility, and foresight.

Call to Action for CDOs

- Assess your readiness: Do you have continuous visibility into your AI pipelines and data contracts?

- Elevate observability to a board-level priority: Position it alongside AI ethics, governance, and innovation strategy.

- Lead the cultural shift: Ensure every team understands observability as essential to business outcomes, not optional tooling.

The organizations that make this shift today will be tomorrow’s AI leaders — not because they built better models, but because they trusted their data to fuel them.

Ready to Go Deeper?

Explore our Executive Guide — The New Imperative for Trusted, AI-Ready Data — for practical strategies and frameworks to accelerate your observability journey.
