Who’s that dark shadow at your office door? Is it your (un)friendly neighborhood data engineer, looking like the world has come to an end?

And when you suggest a different data set, do they sigh deeply and reply with a look of confusion and bewilderment?

Look, you are not alone. Listening to colleagues and customers, I know that data quality and data reliability have become *huge* problems in many companies. Data engineers are overwhelmed, and 97% report experiencing burnout in their day-to-day jobs.

The result is data downtime, and it plagues the companies that can least afford it: those that have transformed into data-driven digital enterprises and are relying on analytics to make mission-critical operational decisions, often in near-real-time.

Why Is There So Much Bad Data?

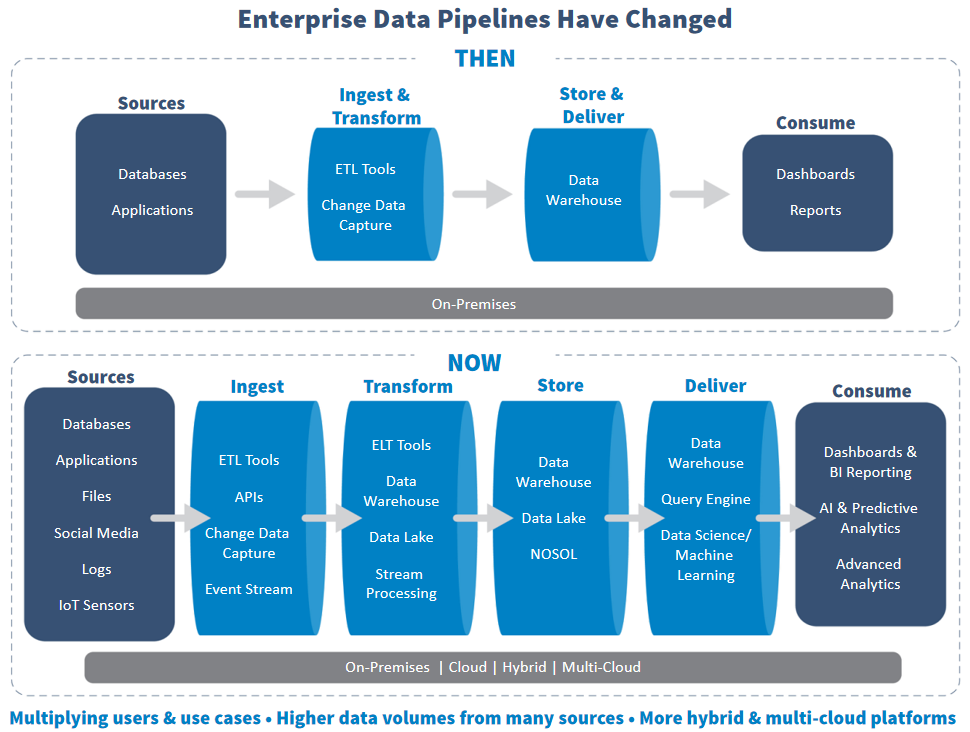

There are lots of causes for today’s epidemic of bad data. They all boil down to how data pipelines have fundamentally changed.

1. Data Pipeline Networks are Much Bigger and More Complicated

As you can see from the chart below (available in this Eckerson Group white paper), the data lifecycle used to be pretty simple. Data in an application or SQL database was ingested into a data warehouse via a basic ETL or CDC tool, sometimes transformed, cleaned and tested for quality along the way. From there, it was stored and transmitted onward to a simple analytics application, which crunched the data and produced a weekly or monthly report or a dashboard.

Building a data pipeline for a new analytics report or a new data source took a lot of effort, so the gatekeepers (business analysts and database administrators) carefully weighed every data change. As a result, data stayed on the static side.

To meet the ongoing explosion in the supply of data inside businesses, vendors have released a variety of tools making the creation of data pipelines easier than ever. And so modern data architectures have ballooned, growing bigger and more complicated in a short period of time.

Now, there are many more data sources, including real-time streaming ones. There is also a wider variety of data stores, most notably cloud data lakes that IT has embraced for their flexibility and scalability, as well as NoSQL data stores and good old legacy data warehouses, which are too mission-critical to turn off and too expensive to migrate. Some data is connected directly to an analytics application, while much of it is transformed or aggregated while traveling through a data pipeline, where it is stored again.

Many businesses spent the last couple of years frantically building out massive networks of data pipelines — without corresponding data observability and management tools. And now they are reaping what they sowed: a crop of data errors. That’s because...

2. Data Pipelines are More Fragile Than Ever

Why are today’s data pipelines, in the words of the analysts at Eckerson Group, such “fragile webs of many interconnected elements”?

After all, doesn't the latest generation of tools for creating data pipelines, including ETL, ELT, APIs, CDC, and event streamers (e.g. Apache Kafka), boast more powerful data ingestion, transformation and quality features than before?

Well, that is part of the problem. Companies are doing many more things with their data that would have been unheard of a decade ago, just because they can. They are combining data sets from wildly different sources, data formats, and time periods, and they are doing these transformations and aggregations multiple times, creating lengthy data lineages (more on that later). They are also interconnecting data sources and analytics applications from very different parts of the business.

However, every time data is aggregated or transformed, there is a chance for something to go wrong: missing or incorrect data, duplicated or inconsistent data, poorly defined schemas, broken tables, and more.
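To make two of those failure modes concrete, here is a minimal sketch of a check for missing values and duplicated keys. It assumes rows arrive as plain Python dictionaries; the function name `profile_rows` and the sample `orders` data are hypothetical and only there for illustration.

```python
from collections import Counter

def profile_rows(rows, key_field, required_fields):
    """Count missing required values and list keys that appear more than once."""
    missing = Counter()
    for row in rows:
        for field in required_fields:
            # Treat both None and the empty string as "missing".
            if row.get(field) in (None, ""):
                missing[field] += 1
    key_counts = Counter(row.get(key_field) for row in rows)
    duplicates = sorted(k for k, n in key_counts.items() if k is not None and n > 1)
    return {"missing": dict(missing), "duplicate_keys": duplicates}

# Hypothetical sample data: one blank customer, one null amount, one repeated key.
orders = [
    {"order_id": 1, "customer": "acme", "amount": 120.0},
    {"order_id": 2, "customer": "", "amount": 75.5},
    {"order_id": 2, "customer": "globex", "amount": 75.5},
    {"order_id": 3, "customer": "initech", "amount": None},
]

report = profile_rows(orders, "order_id", ["customer", "amount"])
print(report)
```

A check like this is cheap enough to run after each aggregation or transformation, not just once at ingestion.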

New forms of data pipelines can also be more prone to problems. For instance, real-time event streams can be vulnerable to missing data if a hardware or network hiccup arises. And the sheer diversity of tools creates greater chances for data engineers, especially poorly-trained ones, to inadvertently cause the quality of a data set to degrade.
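As a rough illustration of how missing stream data might be spotted, the sketch below looks for gaps in event sequence numbers. It assumes the producer stamps each event with a steadily increasing integer, which not every streaming setup does; `find_gaps` and the sample numbers are hypothetical.

```python
def find_gaps(sequence_numbers):
    """Return (first_missing, last_missing) ranges between received sequence numbers."""
    ordered = sorted(set(sequence_numbers))  # tolerate out-of-order and repeated events
    gaps = []
    for prev, cur in zip(ordered, ordered[1:]):
        if cur - prev > 1:
            gaps.append((prev + 1, cur - 1))
    return gaps

# Hypothetical batch of events received after a brief network hiccup.
received = [101, 102, 103, 106, 107, 110]
gaps = find_gaps(received)
print(gaps)
```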

3. Data Lineages are Longer than Before

Not all data is created equal. Some datasets are more valuable than others, and those tend to be constantly used, reused, transformed, and combined with other datasets. Their data lifecycle can be extremely long.

But what if an error emerges in this key dataset? To track down the error, you would go through the data lineage all the way back to the original data set. Looking at all of the dependencies and figuring out where things went wrong is always time-consuming. But it can also be impossible if the information (the dataset’s metadata) is incomplete or missing.
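Walking a lineage backwards can be sketched as a simple graph traversal. The example below assumes lineage metadata is available as a mapping from each dataset to its direct upstream sources; the dataset names and the `upstream_of` helper are hypothetical.

```python
from collections import deque

def upstream_of(dataset, parents):
    """Return every ancestor of `dataset`, nearest first (breadth-first)."""
    seen = []
    queue = deque(parents.get(dataset, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue
        seen.append(node)
        # A dataset with no recorded parents ends the trail here.
        queue.extend(parents.get(node, []))
    return seen

# Hypothetical lineage metadata: dataset -> direct upstream sources.
lineage = {
    "revenue_dashboard": ["monthly_revenue"],
    "monthly_revenue": ["orders_clean", "fx_rates"],
    "orders_clean": ["orders_raw"],
}

ancestors = upstream_of("revenue_dashboard", lineage)
print(ancestors)
```

Note what happens when metadata is missing: if nobody recorded where `orders_clean` came from, the traversal simply stops there, and so does your investigation.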

And that’s very possible, because most traditional data catalogs and dictionaries do very little to create data lineage metadata about how the data is used, which other assets it is connected to, and so on. Rather, data engineers were expected to manually do most of the categorization and tagging themselves. Of course, in the rush to build out their data pipelines, many engineers skipped the metadata documentation, only to regret it once data quality problems emerged that they needed to track down.

4. Traditional Data Quality Testing is Insufficient

Traditionally, data quality tests are only performed once, when the data is ingested into the data warehouse.

But as discussed, every time data travels through a data pipeline, quality can degrade for any of the reasons listed above.

Data quality needs to be tested continually throughout the data’s lifecycle. Moreover, it should be tested proactively, rather than in reaction to a data error. Once an error has occurred, any delay in fixing it could cost your business a lot of money. Unfortunately, classic data quality monitoring tools are purely reactive: they can only monitor for problems, rather than help you identify and stop problems before they occur.
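Here is a minimal sketch of what testing at every step, rather than only at ingestion, could look like. It is not how any particular product works; the stage functions, the check, and the deliberately buggy `add_tax` step are all hypothetical.

```python
def run_pipeline(rows, stages, checks):
    """Apply each stage in order and run every check on that stage's output."""
    failures = []
    for stage in stages:
        rows = stage(rows)
        for check in checks:
            if not check(rows):
                failures.append((stage.__name__, check.__name__))
    return rows, failures

def deduplicate(rows):
    """Keep the first row seen for each id."""
    seen, out = set(), []
    for r in rows:
        if r["id"] not in seen:
            seen.add(r["id"])
            out.append(r)
    return out

def add_tax(rows):
    # Deliberate bug for illustration: a zero amount is falsy, so it becomes None.
    return [{**r, "amount": r["amount"] * 1.2 if r["amount"] else None} for r in rows]

def no_null_amounts(rows):
    return all(r["amount"] is not None for r in rows)

# Hypothetical input that would pass a one-time check at ingestion.
raw = [{"id": 1, "amount": 10.0}, {"id": 1, "amount": 10.0}, {"id": 2, "amount": 0.0}]
result, failures = run_pipeline(raw, [deduplicate, add_tax], [no_null_amounts])
print(failures)
```

The input is clean when it arrives, so a single ingestion-time test would report no problem. Only the check that runs after `add_tax` catches the value that went missing mid-pipeline.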

5. Data Democracy Applies to Data Pipelines, Too

For sure, I am not against citizen data scientists and self-service BI analysts. For analytics to become more embedded into every key operational decision or customer interaction, it must become available to many more job titles in the business.

However, the reality is that data democratization can worsen data quality and reliability. Part of that is because employees who don’t work with data full-time will be less skilled at creating data pipelines.

The bigger issue is that data democracy loosens up formerly tightly-controlled processes and workflows. So, for instance, a citizen data scientist may lack historical knowledge about missing or incomplete values in a certain data source. Or they may be more prone to forget to enter key metadata around a newly-created dataset, making data lineage difficult to track.

On the whole, data democracy is a good thing. But unless the right data observability tools are deployed, the tradeoff can be very painful.

What’s the Solution?

Every one of the five issues cited above can be successfully addressed — just not with the current crop of data monitoring and governance tools. They are too reactive, too manual, and too lacking in their own analytics abilities.

What’s needed is a modern data governance and data quality solution, one that provides:

- Automated data discovery and smart tagging that makes tracking data lineages and problems a piece of cake

- Constant data reliability and quality checks, rather than the single-moment-in-time testing of classic data quality tools

- Machine learning-powered predictive analyses and recommendations that ease the workload of data engineers, who are currently bombarded with either too many alerts or too few

- A 360-degree view of your entire data infrastructure that can identify the main sources of truth and prioritize validating the data there, as well as monitoring the data pipelines that originate there for potential problems

The Acceldata Data Observability Platform is a full-fledged, modern approach to solving data quality in today’s complex and heterogeneous environment. Contact us to get a demo of the Acceldata Data Observability Platform.
