1. Two Worlds, One Enterprise

The modern enterprise has long thrived on deterministic systems—processes built on clear rules, repeatable outcomes, and predictable inputs. These systems form the bedrock of operational reliability, regulatory compliance, and customer trust. Whether it’s financial reporting, supply chain orchestration, or customer onboarding, deterministic logic ensures that business processes remain consistent, traceable, and controllable.

But with the rise of AI, and particularly Large Language Models (LLMs), a new type of intelligence has entered the enterprise ecosystem: probabilistic reasoning. Unlike deterministic systems, LLMs interpret, predict, and suggest rather than follow strict instructions. Their outputs are drawn from probability distributions learned from vast datasets and shaped by patterns that are not always immediately explainable. In other words, they don’t just look up answers; they estimate them based on context.

This introduces a fundamental tension. What happens when a system that is inherently probabilistic is embedded within a deterministic enterprise workflow? The result is a new paradigm—one where the precision of rules must coexist with the flexibility of predictions. Enterprises must adapt not by choosing one over the other, but by learning when and how to combine them effectively.

In this article, we’ll explore this balance, offer practical frameworks, and highlight how leading organizations are already navigating the convergence of deterministic control and probabilistic intelligence.

2. Why This Tension Exists

At the heart of this challenge lies the mismatch between expectations and behavior.

Deterministic systems are designed for consistency and control. They follow pre-defined rules and deliver the same output given the same input. This predictability is essential in regulated industries like finance, healthcare, manufacturing, and logistics, where compliance, auditability, and precision are non-negotiable. These systems are the foundation of trust between enterprises and their customers, regulators, and stakeholders.

Probabilistic systems, on the other hand, excel in complex, ambiguous, and context-rich environments. LLMs, by design, generate responses based on patterns and probabilities, often introducing variability into their outputs. While this enables them to handle natural language, infer intent, and summarize large documents, it also introduces uncertainty—a characteristic that can feel risky to enterprises accustomed to deterministic clarity.
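To make the contrast concrete, here is a toy Python sketch. A rule-based fee function returns the same value every time, while a sampler draws an output in proportion to temperature-scaled softmax probabilities, which is a common way LLMs choose their next token. The fee rule, the three-word vocabulary, and the scores are all hypothetical. A real model samples from a vocabulary of many thousands of tokens.

```python
import math
import random
from typing import Optional, Sequence


def deterministic_fee(amount: float) -> float:
    """Rule-based logic: the same input always produces the same output."""
    # Hypothetical rule: 1.5% fee above 1,000, otherwise a flat fee of 5.00.
    return round(amount * 0.015, 2) if amount > 1000 else 5.0


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(tokens: Sequence[str], logits: Sequence[float],
                      temperature: float = 1.0,
                      rng: Optional[random.Random] = None) -> str:
    """Probabilistic logic: draw one token in proportion to its probability."""
    if rng is None:
        rng = random.Random()
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


if __name__ == "__main__":
    tokens = ["approved", "pending", "declined"]  # toy vocabulary (hypothetical)
    logits = [2.0, 1.0, 0.1]                      # toy model scores (hypothetical)
    print([deterministic_fee(2000.0) for _ in range(3)])  # identical every run
    rng = random.Random(7)
    print([sample_next_token(tokens, logits, 1.0, rng) for _ in range(5)])  # varies by draw
```

Lowering `temperature` concentrates probability on the top-scoring token, which reduces variability but does not by itself turn the model into a rule-based system.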

Consider a few enterprise examples:

- A customer support chatbot powered by an LLM might generate varied—but accurate—answers to the same question depending on phrasing and context. A deterministic script would always give the same answer, regardless of nuance.

- A compliance check for a banking transaction must be repeatable and justifiable. Here, a rule-based system prevails, but AI might augment it by flagging edge cases based on anomalies.

- An AI-generated product description for ecommerce is probabilistic creativity at work, but feeding that into a rigid catalog management system without validation can create inconsistencies.
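The banking example can be sketched as a deterministic rule gate that makes the decision, plus a statistical signal that can only add a review flag. This is a minimal illustration under stated assumptions. The hold threshold and the blocked-country code are placeholders, and the z-score against a customer's past amounts stands in for whatever anomaly model an institution actually uses.

```python
from dataclasses import dataclass
from statistics import mean, pstdev

# Hypothetical policy values, for illustration only.
HOLD_THRESHOLD = 10_000.00
BLOCKED_COUNTRIES = {"XX"}  # placeholder code, not a real sanctions list


@dataclass
class Transaction:
    amount: float
    country: str


def rule_decision(tx: Transaction) -> tuple[str, list[str]]:
    """Deterministic gate: same transaction, same decision, with explicit reasons."""
    if tx.country in BLOCKED_COUNTRIES:
        return "reject", ["destination country is blocked"]
    if tx.amount >= HOLD_THRESHOLD:
        return "hold", [f"amount >= {HOLD_THRESHOLD:,.2f} requires manual release"]
    return "approve", []


def anomaly_score(amount: float, history: list[float]) -> float:
    """Simple z-score against past amounts (a stand-in for a real anomaly model)."""
    if len(history) < 2:
        return 0.0
    spread = pstdev(history)
    return 0.0 if spread == 0 else abs(amount - mean(history)) / spread


def assess(tx: Transaction, history: list[float], z_limit: float = 3.0) -> dict:
    decision, reasons = rule_decision(tx)
    score = anomaly_score(tx.amount, history)
    # The anomaly signal only adds a review flag; it never changes the rule decision.
    return {
        "decision": decision,
        "reasons": reasons,
        "anomaly_score": round(score, 2),
        "review": score > z_limit,
    }
```

Because the anomaly score never changes `decision`, the outcome stays repeatable and explainable from `reasons`, while the probabilistic signal still surfaces unusual edge cases for an analyst.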

This is where the tension emerges: the enterprise wants both innovation and control. Probabilistic systems threaten perceived reliability; deterministic systems hinder flexibility and scale.

Rather than choosing sides, forward-looking enterprises are realizing they need a new approach, one that blends confidence scoring, exception handling, and human-in-the-loop oversight. In the sections that follow, we’ll map where each paradigm fits and then explore how to design a hybrid operating model that plays to the strengths of both.

3. From Rules to Reasoning: The Intelligence Spectrum

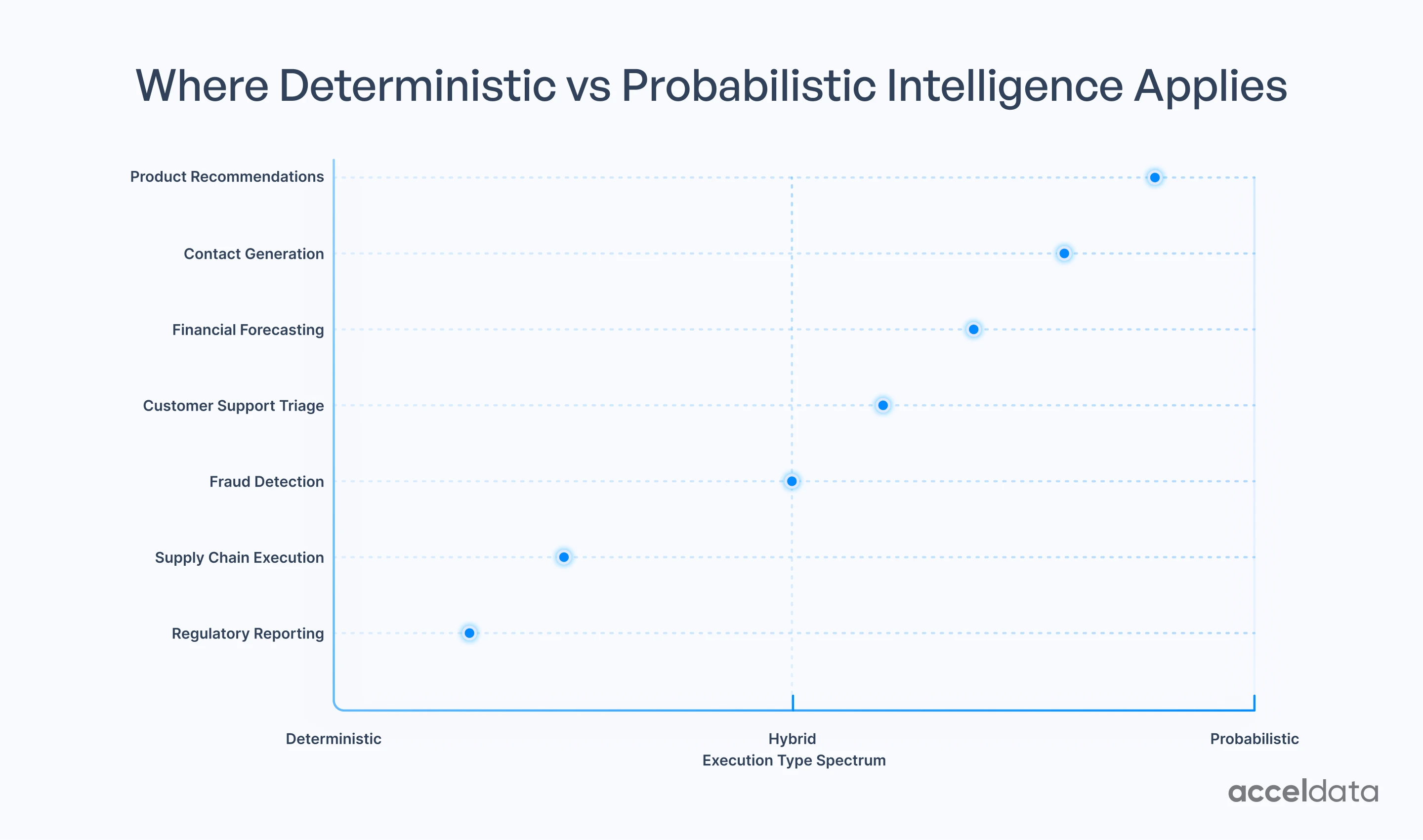

To help enterprises decide where and how to apply probabilistic versus deterministic logic, consider the framework shown in the figure above. It maps common enterprise tasks along a spectrum according to whether deterministic logic or probabilistic reasoning tends to dominate, acknowledging that most real-world processes contain elements of both.

This spectrum highlights that most enterprises don’t operate at the extremes. Instead, different workflows require different types of reasoning. The goal is not to force everything into one model, but to architect systems that apply each paradigm where it excels. To see how this spectrum is playing out in the real world, let’s look at how leading enterprises are blending these approaches across industries.

4. Industry Examples in Action

To illustrate how this balance is playing out across sectors, here are real-world examples where enterprises blend deterministic and probabilistic approaches:

Finance — JPMorgan Chase

Uses deterministic systems for compliance, while probabilistic AI (via its COiN platform) accelerates legal document review, flagging anomalies for human validation.

Retail — Walmart

Combines deterministic supply chain execution with AI-driven demand forecasting and dynamic pricing recommendations.

Healthcare — Mayo Clinic

Maintains deterministic treatment protocols but leverages probabilistic AI to interpret unstructured clinical data and flag potential diagnoses.

Consumer Packaged Goods — Unilever

Applies deterministic quality controls on production lines, enhanced by AI analysis of unstructured consumer feedback across digital channels.

Technology — Salesforce Einstein

Integrates into deterministic CRM workflows, offering probabilistic lead scoring to guide (but not override) sales decisions.

These examples show a broader trend: enterprises are not abandoning rules—they are augmenting them with reasoning.

5. Designing a Hybrid Operating Model — The Agentic Autonomy Curve

To successfully integrate probabilistic and deterministic systems, enterprises must design hybrid workflows that deliberately balance the strengths of both approaches. This means more than simply embedding an LLM in a business process—it means redefining how tasks are routed, governed, and interpreted.

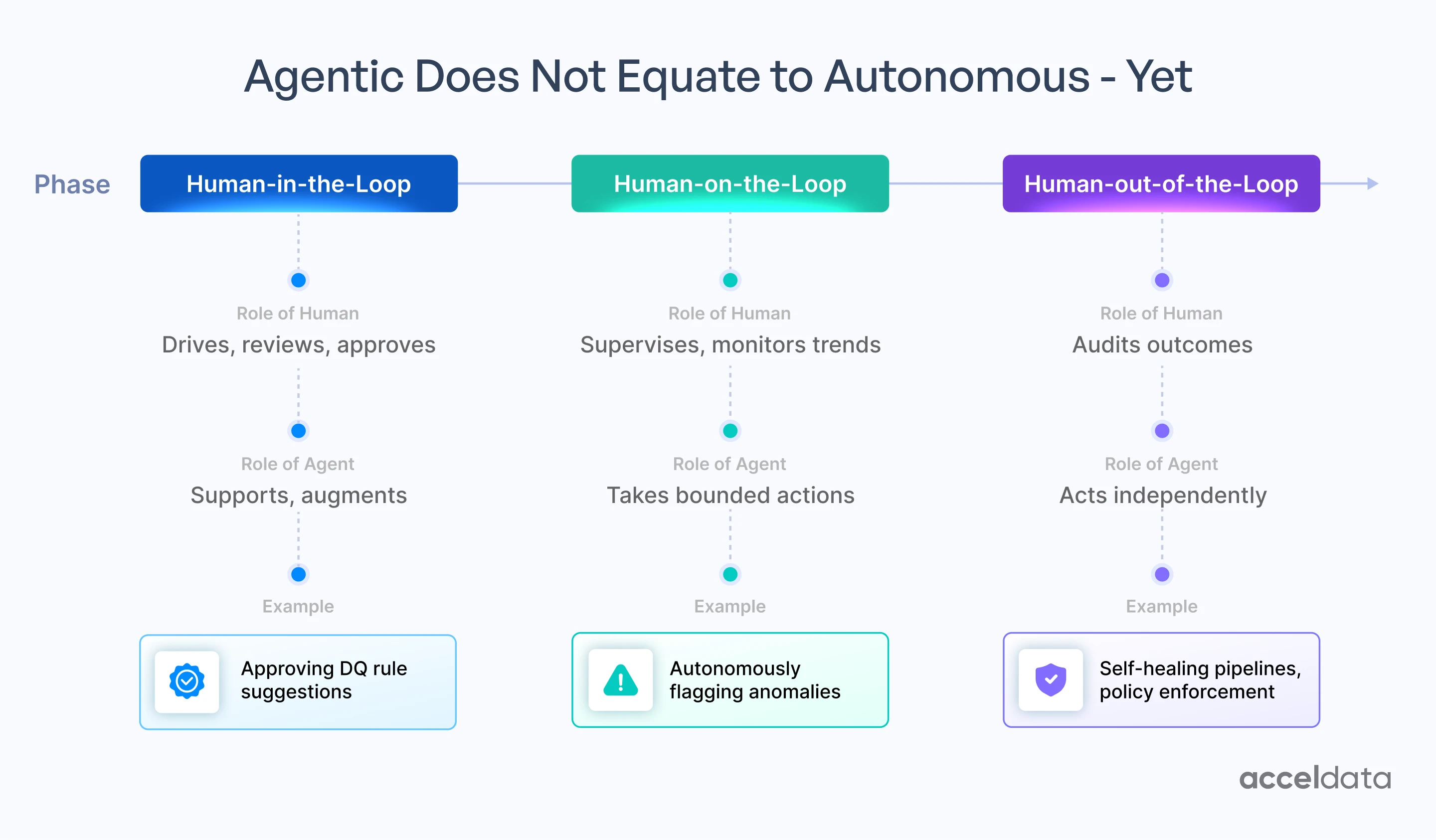

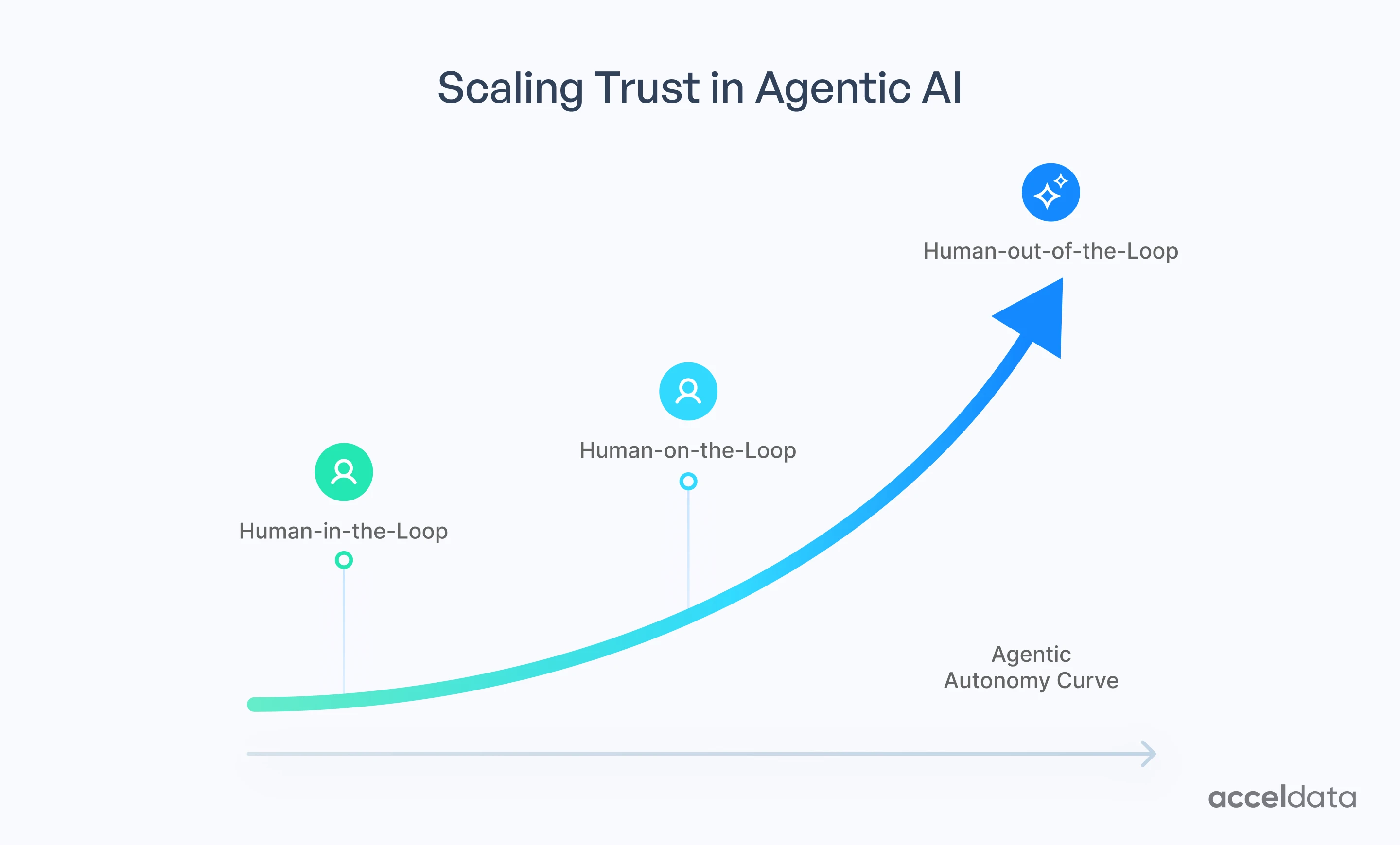

One of the most practical ways to understand this evolution is through what we call the Agentic Autonomy Curve—a maturity model that maps the progression of human oversight as enterprises build trust in agentic AI systems:

Agentic systems evolve along a spectrum of autonomy. In the early stages, agents support and augment humans with tight review loops. As trust increases and performance improves, agents take bounded actions under supervision. Ultimately, some reach a stage where agents operate autonomously within policy-driven constraints and humans audit the outcomes after the fact.

Human-in-the-Loop

- Role of Human: Drives, reviews, and approves decisions

- Role of Agent: Supports and augments

- Example: Approving AI-generated data quality rule suggestions

- Design Principles: Apply strict confidence thresholds; maintain deterministic validation as final gate

Human-on-the-Loop

- Role of Human: Supervises and monitors trends

- Role of Agent: Takes bounded actions

- Example: Autonomously flagging anomalies in production pipelines

- Design Principles: Let agents act within safe zones; use policies to define operational boundaries

Human-out-of-the-Loop

- Role of Human: Audits outcomes after the fact

- Role of Agent: Acts independently

- Example: Self-healing pipelines, autonomous policy enforcement

- Design Principles: Require full observability and traceability; agents must operate within well-defined, policy-based boundaries
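One way to encode these three stages is to let each workflow carry an oversight level that determines whether an agent's action waits for approval, runs with a supervisor notified, or runs and is audited later. The sketch below assumes hypothetical `execute` and `notify` callbacks supplied by the host system. Every path writes to the audit log, since traceability matters at each stage, not only the last.

```python
from enum import Enum
from typing import Callable


class Oversight(Enum):
    HUMAN_IN_THE_LOOP = "human-in-the-loop"
    HUMAN_ON_THE_LOOP = "human-on-the-loop"
    HUMAN_OUT_OF_THE_LOOP = "human-out-of-the-loop"


def handle_action(action: str, level: Oversight,
                  execute: Callable[[str], None],
                  notify: Callable[[str], None],
                  audit_log: list) -> str:
    """Route one agent action according to the workflow's oversight level."""
    if level is Oversight.HUMAN_IN_THE_LOOP:
        status = "pending_approval"        # a human approves before anything runs
    elif level is Oversight.HUMAN_ON_THE_LOOP:
        execute(action)
        notify(action)                     # a supervisor monitors and can intervene
        status = "executed_and_notified"
    else:
        execute(action)
        status = "executed"                # humans audit later from the log
    audit_log.append({"action": action, "level": level.value, "status": status})
    return status
```

The example actions in a test run could mirror the stages above: approving a suggested data quality rule, quarantining a batch after an anomaly, or restarting a failed job in a self-healing pipeline.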

These levels of oversight are not isolated—they represent key inflection points along the Agentic Autonomy Curve, a framework for scaling AI-driven decision-making responsibly. As confidence in agent behavior increases, enterprises move along the curve toward greater autonomy while maintaining governance.

Each stage aligns with the broader hybrid operating model principles:

- Confidence-Threshold Triggers – Define the level of certainty required before an agent can act without human intervention.

- Rule-Based Guardrails – Ensure agents operate within clearly defined business and regulatory boundaries.

- Human Oversight as Needed – Embed human review where ambiguity, risk, or novelty requires judgment.

- Context-Preserving Architecture – Provide agents access to meaningful, cross-domain context in real time.

- Progressive Autonomy – Scale agent capabilities gradually based on performance and organizational trust.
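The first two principles, confidence-threshold triggers and rule-based guardrails, combine naturally into a single routing function. The sketch assumes the agent emits a calibrated confidence score, which many LLM setups do not provide out of the box. The thresholds (0.90 and 0.60), the `Proposal` type, and the two guardrails are hypothetical examples.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Proposal:
    action: str
    confidence: float                     # assumed calibrated score in [0, 1]
    payload: dict = field(default_factory=dict)


# A guardrail returns a violation message, or None if the proposal is acceptable.
Guardrail = Callable[[Proposal], Optional[str]]


def allowed_actions(allowed: set) -> Guardrail:
    def check(p: Proposal) -> Optional[str]:
        return None if p.action in allowed else f"action '{p.action}' is not permitted"
    return check


def max_amount(limit: float) -> Guardrail:
    def check(p: Proposal) -> Optional[str]:
        amount = p.payload.get("amount", 0)
        return f"amount {amount} exceeds limit {limit}" if amount > limit else None
    return check


def decide(p: Proposal, guardrails: list,
           auto_at: float = 0.90, review_at: float = 0.60) -> tuple[str, list[str]]:
    """Rules first, then confidence: a high score never overrides a guardrail."""
    violations = [msg for msg in (g(p) for g in guardrails) if msg is not None]
    if violations:
        return "blocked", violations
    if p.confidence >= auto_at:
        return "auto_execute", []
    if p.confidence >= review_at:
        return "human_review", []
    return "fallback", []  # e.g. hand off to a deterministic default path
```

Checking guardrails before confidence is the key design choice: a proposal that breaks a rule is blocked no matter how certain the model claims to be.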

Enterprises should approach this maturity curve intentionally—starting with support, moving toward supervised action, and eventually enabling autonomous operations where justified.
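Progressive autonomy can be made explicit by tying movement along the curve to a measured track record. In this hypothetical sketch, each agent outcome is later judged correct or incorrect by a reviewer, and the workflow is promoted or demoted when accuracy over a rolling window crosses assumed thresholds.

```python
from collections import deque

STAGES = ["human-in-the-loop", "human-on-the-loop", "human-out-of-the-loop"]


class AutonomyTracker:
    """Moves a workflow along the curve based on reviewed outcomes (hypothetical policy)."""

    def __init__(self, window: int = 200, min_samples: int = 100,
                 promote_at: float = 0.98, demote_below: float = 0.90) -> None:
        self.outcomes = deque(maxlen=window)  # most recent reviewed outcomes
        self.min_samples = min_samples        # should not exceed window
        self.promote_at = promote_at
        self.demote_below = demote_below
        self.level = 0                        # every workflow starts fully supervised

    @property
    def stage(self) -> str:
        return STAGES[self.level]

    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def record(self, correct: bool) -> str:
        """Record one reviewed outcome and return the (possibly updated) stage."""
        self.outcomes.append(correct)
        if len(self.outcomes) >= self.min_samples:
            acc = self.accuracy()
            if acc >= self.promote_at and self.level < len(STAGES) - 1:
                self.level += 1
                self.outcomes.clear()  # trust must be re-earned at the new stage
            elif acc < self.demote_below and self.level > 0:
                self.level -= 1
                self.outcomes.clear()
        return self.stage
```

Clearing the window after each move means trust must be re-earned at every stage. A production policy would likely also demote immediately after a single severe incident rather than waiting for the window to fill.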

A well-designed hybrid model doesn’t just improve efficiency—it builds resilience. It allows enterprises to scale the power of AI without compromising the transparency, accountability, and auditability that deterministic systems ensure.

6. Strategic Recommendations for Enterprise Leaders

As enterprises move toward embedding agentic AI systems into their operations, success will depend on their ability to navigate the balance between flexibility and control, innovation and governance. Based on the principles explored in this article, here are key strategic recommendations:

- Map Use Cases Along the Spectrum

Classify enterprise workflows by their level of ambiguity, structure, and risk. Apply deterministic systems where repeatability is critical and probabilistic reasoning where variability and nuance dominate.

- Design for Oversight, Not Overhaul

Introduce agents first in advisory or human-in-the-loop capacities. Use confidence thresholds, fallback mechanisms, and audit trails to control escalation and build trust.

- Invest in AI-Ready, Converged Data Platforms

Adopt architectures that let agents reason over structured and unstructured data together and that provide the context and governance they need to operate confidently and responsibly across your business. Avoid treating AI as a bolt-on; design for embedded context.

- Balance Innovation with Guardrails

Empower agents with autonomy, but always within boundaries defined by policies, risk thresholds, and business logic. Trust is not a given; it’s earned.

- Treat AI Governance as a First-Class Concern

Monitor, measure, and document agent decisions. Develop new metrics and reviews for probabilistic accuracy, bias, and drift. Governance can’t be retrofitted once agents are in flight.

- Commit to Continuous Training and Human-AI Teaming

Your people will adapt alongside the AI. Invest in upskilling, change management, and human-in-the-loop design to create symbiotic workflows, not adversarial ones.
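For the governance recommendation, "document agent decisions" can start as an append-only log in which each record stores a hash of the input, the output, the confidence, and the route taken, and is chained to the previous record's hash so that later edits are detectable. The field names and the `AuditLog` class are hypothetical; the hashing uses Python's standard `hashlib`.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(record: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only, hash-chained record of agent decisions (hypothetical schema)."""

    def __init__(self) -> None:
        self.entries: list = []

    def append(self, agent_id: str, prompt: str, output: str, confidence: float,
               route: str, reviewer: Optional[str] = None) -> dict:
        record = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "input_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
            "output": output,
            "confidence": confidence,
            "route": route,          # e.g. "auto_execute" or "human_review"
            "reviewer": reviewer,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        record["hash"] = _digest(record)
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

The chain is only tamper-evident if the latest hash is also stored somewhere the agent itself cannot write to.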

By following these principles, enterprises will not just deploy AI—they’ll design resilient, adaptive systems where agents and humans complement each other’s strengths.

Postscript: Why OpenAI’s Forward Deployed Engineers Validate the Agentic Transition

OpenAI’s recent enterprise engagements underscore the themes of this article. While OpenAI is known for building some of the world’s most advanced LLMs, it isn’t simply delivering APIs to enterprises; it’s embedding Forward Deployed Engineers (FDEs) to make those models operational in real-world workflows.

These engineers aren’t traditional consultants. They’re tasked with solving the very challenge explored here: how to introduce probabilistic agents into deterministic environments without compromising trust, safety, or compliance.

FDEs help enterprises:

- Translate probabilistic outputs into deterministic actions.

- Design policy wrappers, confidence thresholds, and human-in-the-loop controls.

- Ensure that model outputs align with the rules and constraints of enterprise systems.
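The first and third points, translating probabilistic outputs into deterministic actions and keeping them aligned with system rules, often come down to a strict contract: parse the model's response, check it against required fields and an allow-list, and route anything else to a person. This is our own minimal illustration, not OpenAI's tooling; the intents, field names, and `to_action` helper are hypothetical.

```python
import json
from typing import Optional

# Hypothetical contract between the model's output and a downstream order system.
ALLOWED_INTENTS = {"update_address", "cancel_order", "escalate"}
REQUIRED_FIELDS = {"intent": str, "customer_id": str, "confidence": (int, float)}


def validate(raw: str) -> tuple[Optional[dict], list[str]]:
    """Deterministic gate on probabilistic output: parse, type-check, allow-list."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return None, ["expected a JSON object"]
    errors = []
    for name, expected in REQUIRED_FIELDS.items():
        if name not in data:
            errors.append(f"missing field: {name}")
        elif not isinstance(data[name], expected):
            errors.append(f"wrong type for field: {name}")
    if not errors:
        if data["intent"] not in ALLOWED_INTENTS:
            errors.append(f"unknown intent: {data['intent']}")
        if not 0.0 <= data["confidence"] <= 1.0:
            errors.append("confidence out of range")
    return (None, errors) if errors else (data, [])


def to_action(raw: str) -> dict:
    """Map a valid model response to a fixed action; anything else goes to a person."""
    data, errors = validate(raw)
    if data is None:
        return {"action": "route_to_human", "reasons": errors}
    return {"action": data["intent"], "customer_id": data["customer_id"]}
```

Free-form replies such as "Sure! I cancelled it." fail the JSON parse and are routed to a human rather than guessed at, which keeps the downstream system's behavior fully rule-bound.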

In effect, OpenAI’s FDEs put the Agentic Autonomy Curve into practice. They help enterprises find the right entry point, scale with intention, and ensure that LLMs don’t become liabilities within rule-bound systems.

This move validates a larger truth: AI is not plug-and-play. It requires deep architectural thinking, cross-functional collaboration, and new operational roles. The most advanced model in the world still needs a human systems engineer to ensure it works in the enterprise.

That’s not a weakness. It’s a new frontier in how we build human-AI systems—together!
