The Quick Guide to Snowflake Data Pipelines

Data pipelines are a critical component of any organization’s data operations strategy. For Snowflake users, a data pipeline makes it possible to collect, store, process, and analyze data across the entire data journey. It is tempting to view pipelines simply as conductors of data, and while that’s not incorrect, it doesn’t do justice to the enormous role they play or to the work required to develop and manage them.

Building and maintaining data pipelines is an ongoing, continuously evolving proposition. No organization operates with a finite set of data. The very nature of the modern data stack is that it is always ingesting and processing new data and adding new data sources, while also disposing (if the team is judicious about data maintenance) of data that’s no longer usable. To make that happen, data teams are constantly integrating new applications and routing data through data warehouses like Snowflake, Databricks, and Redshift. Data engineers who handle data pipelines don’t live by a “set it and forget it” ethos. They are actively engaged in ensuring that data is accurate, timely, and reliable.

In this blog, we’ll explain how to build a data pipeline effectively and how organizations can create and maintain visibility into their Snowflake data pipelines.

Data Orchestration With Snowflake

Modern data teams have come to rely on Snowflake as the cloud-based data warehouse platform of choice. It enables organizations to store, process, and analyze large amounts of data. The platform provides a secure, scalable, and cost-effective way for organizations to store and query their data. By leveraging data warehousing, analytics, machine learning, and more, Snowflake can help organizations in a variety of areas such as business intelligence, analytics, reporting, operational insights, customer segmentation, and personalization.

Data orchestration is the process of managing and coordinating data flows between different systems. It involves scheduling, automating, and monitoring data pipelines to ensure that data is moved from one system to another in an efficient and secure manner. Snowflake enables and improves data orchestration by providing a cloud-based platform for managing all aspects of the data pipeline. Snowflake data orchestration allows users to easily create workflows for moving data between various systems, as well as schedule jobs for running those workflows on a regular basis.

Building Data Pipelines for Snowflake

One of the most important elements of building a Snowflake data pipeline is the data versioning capability built into Snowflake’s platform. Snowflake retains earlier versions of table data for a configurable retention period, so teams can query or restore the data as it existed before a bad load or a faulty transformation instead of manually recreating it from scratch each time there is an issue or bug in the system.

Snowflake’s “Time Travel” feature exposes this history. Within the retention period (one day by default, and up to 90 days for permanent tables on Enterprise Edition and higher), users can query a table as of an earlier point in time, clone it at that point, or restore dropped tables, schemas, and databases. Because everyone involved in developing or managing the database can see both current and past states of the data, Time Travel can also reduce confusion stemming from miscommunicated changes or from work performed simultaneously on the same tables.
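As a rough illustration of how a pipeline might use Time Travel, the sketch below builds queries with Snowflake's `AT(OFFSET => ...)` and `AT(TIMESTAMP => ...)` clauses. The helper name `time_travel_query` and the table name `orders` are hypothetical, and the function only produces SQL text; running it requires a Snowflake connection and a table that is still inside its retention period.

```python
from datetime import datetime, timezone
from typing import Optional


def time_travel_query(table: str, *, seconds_ago: Optional[int] = None,
                      as_of: Optional[datetime] = None) -> str:
    """Build a SELECT that reads `table` as it existed at an earlier point.

    Hypothetical helper: pass exactly one of `seconds_ago` or `as_of`.
    """
    if (seconds_ago is None) == (as_of is None):
        raise ValueError("pass exactly one of seconds_ago or as_of")
    if seconds_ago is not None:
        if seconds_ago < 0:
            raise ValueError("seconds_ago must be non-negative")
        # OFFSET is a number of seconds relative to now, so it is negative.
        return f"SELECT * FROM {table} AT(OFFSET => -{seconds_ago})"
    if as_of.tzinfo is None:
        raise ValueError("as_of must be timezone-aware")
    # Normalize to UTC and emit an ISO 8601 timestamp with an explicit offset.
    stamp = as_of.astimezone(timezone.utc).isoformat(timespec="seconds")
    return f"SELECT * FROM {table} AT(TIMESTAMP => '{stamp}'::timestamp_tz)"


# Example: the (hypothetical) orders table as it looked one hour ago.
print(time_travel_query("orders", seconds_ago=3600))
```

The same `AT(...)` clause can follow `CREATE TABLE ... CLONE ...` when the goal is to restore an earlier state into a new table rather than just inspect it.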

How to Build a Data Pipeline in Spark

Enterprise data teams should also consider using Apache Spark when building data pipelines with Snowflake. The Snowflake Connector for Spark lets Spark read data from and write data to Snowflake efficiently. Teams can use Spark’s scalability for transformation work while still taking advantage of Snowflake’s features for storage and querying.
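A minimal sketch of what reading and writing through the connector can look like from PySpark is shown below. The option keys (`sfURL`, `sfUser`, and so on) and the `net.snowflake.spark.snowflake` source name are the connector's own; the helper functions, their parameters, and any table names are assumptions for illustration. The `spark` and `df` arguments are an existing `SparkSession` and `DataFrame`, which this sketch does not create.

```python
# Data source name used by the Snowflake Connector for Spark.
SNOWFLAKE_SOURCE_NAME = "net.snowflake.spark.snowflake"


def snowflake_options(account_url, user, password, database, schema, warehouse):
    """Collect connection settings under the connector's option keys."""
    return {
        "sfURL": account_url,
        "sfUser": user,
        "sfPassword": password,
        "sfDatabase": database,
        "sfSchema": schema,
        "sfWarehouse": warehouse,
    }


def read_table(spark, options, table):
    """Load a Snowflake table into a Spark DataFrame (hypothetical helper)."""
    return (
        spark.read.format(SNOWFLAKE_SOURCE_NAME)
        .options(**options)
        .option("dbtable", table)
        .load()
    )


def write_table(df, options, table, mode="append"):
    """Write a Spark DataFrame to a Snowflake table (hypothetical helper)."""
    (
        df.write.format(SNOWFLAKE_SOURCE_NAME)
        .options(**options)
        .option("dbtable", table)
        .mode(mode)
        .save()
    )
```

In practice, credentials would come from a secrets manager or environment variables rather than being passed around as literals.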

Acceldata’s Snowflake solution aligns cost/value & performance, offers a 360-degree view of your data, and automates data reliability and administration. Take a tour.

Snowflake Data Pipeline Example

Data pipelines are automated processes that move data from one system to another. They can be used to extract, transform, and load (ETL) data from a variety of sources into a target system. Pipelines fit into an organization’s overall data ecosystem by providing an efficient way to transfer data between systems while keeping that data accurate and up to date.

Snowflake Streams and Tasks Example

A common Snowflake pipeline example uses Streams and Tasks together. Data arriving from external sources such as webhooks or message queues is first loaded into a staging table (for example, with Snowpipe). A stream on that table records the rows that have changed, and a task processes those changes with custom SQL logic and writes the results to a target table for further analysis. Snowflake Tasks can also be used on their own for scheduling periodic jobs such as running SQL queries or loading files into tables on a regular basis.
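To make the Streams and Tasks pattern concrete, here is a hedged sketch that generates the three statements such a pipeline typically needs: a stream on the source table, a scheduled task that runs only when the stream has data, and a statement to resume the task (tasks are created suspended). The function name, the table names, and the warehouse name are all hypothetical; the SQL keywords are standard Snowflake syntax.

```python
def stream_and_task_statements(source_table, target_table, columns,
                               warehouse, schedule_minutes=5):
    """Return the SQL statements for a simple stream-plus-task pipeline."""
    stream = f"{source_table}_stream"
    task = f"load_{target_table}"
    cols = ", ".join(columns)
    return [
        # 1. Track row-level changes on the source table.
        f"CREATE OR REPLACE STREAM {stream} ON TABLE {source_table}",
        # 2. On a schedule, copy newly inserted rows into the target table,
        #    but only when the stream actually contains changes.
        (
            f"CREATE OR REPLACE TASK {task}\n"
            f"  WAREHOUSE = {warehouse}\n"
            f"  SCHEDULE = '{schedule_minutes} MINUTE'\n"
            f"WHEN SYSTEM$STREAM_HAS_DATA('{stream}')\n"
            f"AS\n"
            f"  INSERT INTO {target_table} ({cols})\n"
            f"  SELECT {cols} FROM {stream}\n"
            f"  WHERE METADATA$ACTION = 'INSERT'"
        ),
        # 3. Newly created tasks are suspended until resumed.
        f"ALTER TASK {task} RESUME",
    ]


# Example with made-up object names.
for statement in stream_and_task_statements(
    "raw_orders", "orders_clean", ["order_id", "amount"], "transform_wh"
):
    print(statement + ";\n")
```

Each statement could then be executed through any Snowflake client, for instance a cursor from the Snowflake Connector for Python.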

There are many different types of tools available for creating and managing data pipelines, including open-source software and commercial solutions. Regardless of the tool an organization chooses, the goal is typically the same: to create reliable workflows that move large amounts of structured or unstructured data quickly between systems.

Snowflake Continuous Data Pipeline

In the most basic sense, a Snowflake continuous data pipeline is a data processing architecture that allows enterprises to process massive amounts of data quickly and efficiently, in near real time. Think in terms of petabytes, which is fairly standard for enterprises these days. The pipeline is designed to continuously ingest, process, and store data from multiple sources.

Data Pipeline vs. ETL (And Why Automation is Essential)

ETL (extract, transform, load) processes are one kind of data pipeline. ETL pipeline tools extract raw data from a source system, transform it into a usable format such as CSV files or tables, and finally load it into another system, such as a database or an analytics platform like Snowflake, for further analysis. It’s important to follow Snowflake ETL best practices when using Snowflake for ETL processes.

Data teams should recognize that building ETL scripts manually is a recipe for an uncontrollable tsunami of data. Hand-maintained scripts struggle to keep up with incoming real-time data streams and are poorly suited to analyzing data as it arrives. Automating ETL validation scripts improves data validation, and data teams that want control over data operations and effective cleansing and validation of data are increasingly taking an automated approach.

Any data team leader knows the perils of relying too heavily on ETL for data pipeline performance. It’s not that ETL processes are incapable of delivering necessary data, but it’s the repetitive tasks such as running ETL validation scripts that can eat valuable time for highly skilled (and highly compensated) data engineers.

These time-consuming tasks create an unnecessary burden and prevent data pipelines from making full use of Snowflake’s capabilities. Manually writing ETL validation scripts, cleaning data, getting it prepared for consumption, and dealing with ongoing data problems limit what data teams can accomplish.
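As one small example of what automating a validation step can mean, the sketch below checks a batch of rows for missing required values and duplicate keys before the batch is loaded. The function name, the column names, and the sample rows are hypothetical, and a real pipeline would likely add type, range, and freshness checks.

```python
def validate_rows(rows, required, key):
    """Return a list of human-readable problems found in `rows`.

    rows: list of dicts, one per record
    required: column names that must be present and non-empty
    key: column that must be unique across the batch
    """
    problems = []
    seen_keys = set()
    for index, row in enumerate(rows):
        # Flag required columns that are absent, None, or empty strings.
        for column in required:
            if row.get(column) in (None, ""):
                problems.append(f"row {index}: missing {column}")
        # Flag key values that already appeared earlier in the batch.
        value = row.get(key)
        if value is not None:
            if value in seen_keys:
                problems.append(f"row {index}: duplicate {key}={value}")
            seen_keys.add(value)
    return problems


# Made-up sample batch: the second row repeats an id and lacks an amount.
batch = [
    {"id": 1, "amount": 10},
    {"id": 1, "amount": None},
    {"id": 2, "amount": 5},
]
for problem in validate_rows(batch, required=["id", "amount"], key="id"):
    print(problem)
```

Running a check like this on every batch, and failing or quarantining the load when it reports problems, replaces a manual review step with a repeatable one.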

Organizations use continuous data pipelines to enable rapid access to data-derived insights. Snowflake is a powerful and reliable foundation for building one: it can ingest streaming or batch data from various sources, and it enables organizations to capture changes in their databases in real time.

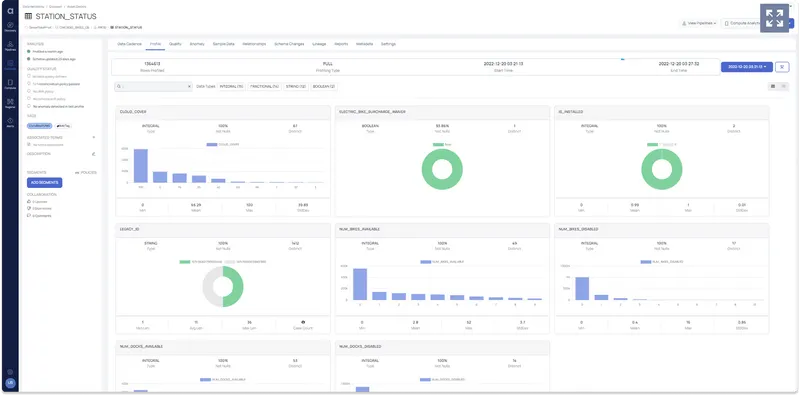

Snowflake users who apply Acceldata’s Data Observability platform get a comprehensive view into how their data is being used across their environment. With so much activity both inside and outside these pipelines, all of which impacts data operational performance, Acceldata users can track end-to-end pipeline performance (both inside and outside of Snowflake). Moreover, they can improve data reliability by using data observability to monitor common issues such as schema drift, data drift, and declining data quality.
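For readers unfamiliar with the term, schema drift simply means that a table's columns or types no longer match what downstream consumers expect. The sketch below shows the basic comparison in plain Python. It is a generic illustration, not a description of how Acceldata implements its monitoring, and the function name and sample schemas are hypothetical.

```python
def schema_drift(expected, observed):
    """Compare two {column_name: data_type} mappings and report differences."""
    expected_cols = set(expected)
    observed_cols = set(observed)
    return {
        # Columns that appeared since the expected schema was recorded.
        "added": sorted(observed_cols - expected_cols),
        # Columns that downstream code expects but are no longer present.
        "removed": sorted(expected_cols - observed_cols),
        # Columns present in both whose type changed (case-insensitive).
        "retyped": sorted(
            column
            for column in expected_cols & observed_cols
            if expected[column].upper() != observed[column].upper()
        ),
    }


# Made-up schemas. In Snowflake, the observed side could be read from
# INFORMATION_SCHEMA.COLUMNS (COLUMN_NAME and DATA_TYPE).
expected = {"ORDER_ID": "NUMBER", "AMOUNT": "NUMBER", "CREATED_AT": "TIMESTAMP_NTZ"}
observed = {"ORDER_ID": "NUMBER", "AMOUNT": "TEXT", "CHANNEL": "TEXT"}
print(schema_drift(expected, observed))
```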

Data Pipeline Stages

Snowflake data pipelines typically involve four stages: ingestion, transformation, storage, and analysis. Let’s look at these more closely, and evaluate their importance to the data pipeline architecture:

Ingestion

This is the first stage of the data analytics pipeline where raw data is collected from various sources such as databases, web APIs, or flat files. This stage involves extracting the data from its source and loading it into a staging area for further processing.

Transformation

In this stage of the pipeline, raw data is transformed into a format that can be used for analytics and machine learning purposes. This includes cleaning up any inconsistencies or errors in the data as well as applying any necessary transformations to make it easier to work with.

Storage

Once the data has been transformed, it needs to be stored somewhere so that it can be accessed by other data pipeline components. This could include storing it in a database or on cloud storage services.

Analysis

The final stage of the pipeline involves analyzing and interpreting the data using techniques such as statistical methods or machine learning algorithms. This allows organizations to gain insights from their datasets that can help inform decision-making processes and improve operational efficiency.
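The four stages above can be sketched end to end in a few lines. The example below is a toy under stated assumptions: it uses Python's built-in `sqlite3` as a stand-in for the warehouse so it runs anywhere, and the CSV content, table name, and function names are all made up. In a real Snowflake pipeline the storage and analysis steps would run against Snowflake instead.

```python
import csv
import io
import sqlite3

# Made-up raw input: one row has stray whitespace, one has no amount.
RAW_CSV = "order_id,amount\n1, 19.99 \n2,\n3,5.00\n"


def ingest(text):
    """Ingestion: read raw records from a source (here, CSV text)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transformation: trim whitespace, drop incomplete rows, cast types."""
    clean = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip rows with no amount
        clean.append((int(row["order_id"]), float(amount)))
    return clean


def store(conn, rows):
    """Storage: persist the cleaned rows in a table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    conn.commit()


def analyze(conn):
    """Analysis: compute simple aggregates over the stored data."""
    count, total = conn.execute(
        "SELECT COUNT(*), SUM(amount) FROM orders"
    ).fetchone()
    return {"orders": count, "revenue": round(total or 0.0, 2)}


def run_pipeline(text):
    conn = sqlite3.connect(":memory:")
    try:
        store(conn, transform(ingest(text)))
        return analyze(conn)
    finally:
        conn.close()


print(run_pipeline(RAW_CSV))
```

Keeping each stage as its own function makes it easier to test, monitor, and replace stages independently, which is the main reason pipelines are usually described in these terms.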

Acceldata for Snowflake

Organizations can use Acceldata to gain more reliable insights into their Snowflake data environments. Snowflake users rely on Acceldata to do the following:

- Improve resource utilization with better cost intelligence, anomaly detection, usage guardrails, overprovisioning detection, and more.

- Increase trust in your data with better end-to-end data pipeline observability thanks to features like quality and performance tracking, automated data quality (DQ) monitoring, schema drift detection, and more.

- Optimize performance and configuration with notification or process triggers for best practices violations, advanced performance analytics capabilities, and account health monitoring.
