What is Data Pipeline Monitoring?

Data pipeline monitoring is an important part of ensuring the quality of your data from the beginning of its journey to the end. Improving your data pipeline observability is one way to improve the quality and accuracy of your data.

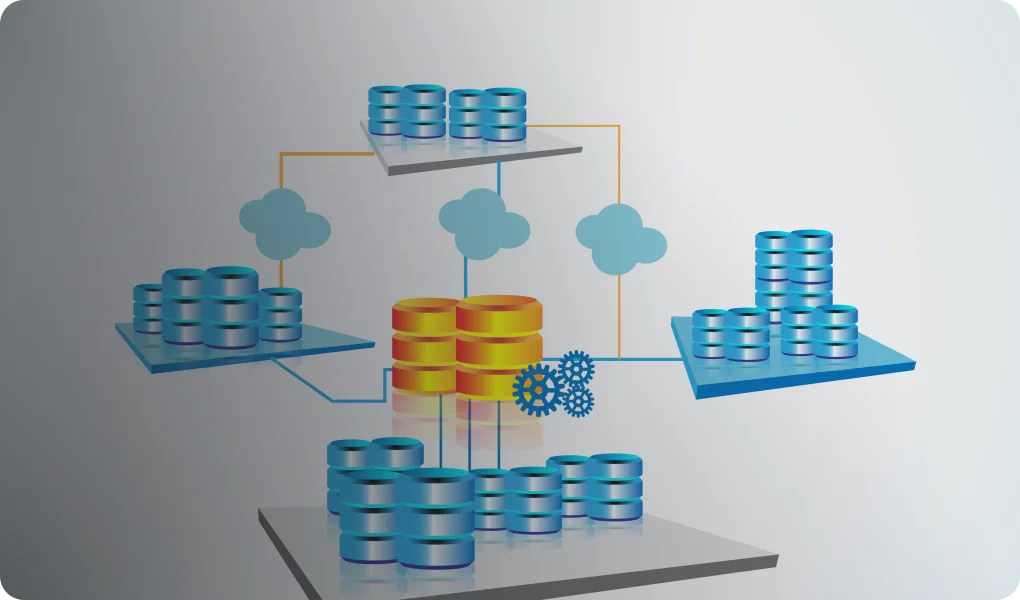

The concept of data observability stems from the fact that it’s only possible to achieve the intended results with a complex system if the system is observable. Data pipeline monitoring is a way to make sure your data is observable. Modern data systems are so complex that data observability tools are practically a requirement. Organizations have so much data coming in from so many different sources that without a way to control it and observe it, the data would be next to useless.

There are three main categories of tools you can use to help with data pipeline monitoring, known as the three pillars of data observability: metrics, logs, and traces. We’ll go into further detail about each of these pillars below. You can also use other tools to monitor data pipelines, such as open source data observability tools. Regardless of the kinds of tools you choose for data monitoring, maintaining full data pipeline observability is essential for verifying the quality of the data your organization uses.

Data pipeline monitoring is just the first step in the process of optimizing your data pipelines. Once your monitoring tools have notified you of a problem, the root cause needs to be identified and resolved. Anomaly detection based on machine learning can improve the odds of predicting data pipeline issues and preventing them before they occur.
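To make the idea of anomaly detection concrete, here is a minimal sketch. It uses a simple statistical z-score check as a stand-in for a machine learning model, and the function name `find_anomalies`, the threshold of 2.0, and the sample row counts are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values, threshold=2.0):
    """Return indices of values more than `threshold` sample standard
    deviations away from the mean (a basic z-score check)."""
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical daily row counts for one pipeline; the last day drops sharply.
daily_row_counts = [1000, 1020, 980, 1010, 990, 1005, 995, 200]
anomalous_days = find_anomalies(daily_row_counts)
print(anomalous_days)
```

A check like this only flags a problem after the unusual value has arrived. Predicting issues ahead of time generally requires a model trained on historical behavior, which is outside the scope of this sketch.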

Data Pipeline Monitoring Tools

There are a few different data monitoring tools you can use to monitor your data pipeline. Three of these kinds of tools are called the three pillars of data observability. They include the following:

Metrics

Metrics are some of the most valuable data monitoring tools. Your systems and applications are constantly generating information about their performance that you can study in order to better understand how your data pipelines are functioning. If you’re trying to verify the health of your data, metrics are a great place to start.

However, one of the challenges of using metrics as data quality monitoring tools is that they represent a vast amount of information from a wide variety of sources. This makes it very difficult to glean any useful insights from them without an appropriate system for organizing and interpreting metrics.

Logs

Logs can also be used to monitor the quality of your data. They are a great tool for observability because nearly every system already keeps them. Logs can be a good alternative to metrics because they often record events in more detail than metrics do.

However, just like with metrics, the main drawback of using logs as data pipeline monitoring tools is that they provide a huge amount of information. This can make it difficult to keep track of the information efficiently enough for it to be useful. Using a tool for storing and managing logs, as well as collecting only the logs that are most important, are great ways to offset this disadvantage.
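One way to collect only the most important logs is to filter by severity. The sketch below uses Python's standard `logging` module. The logger name `pipeline.ingest`, the messages, and the choice of `WARNING` as the cutoff are assumptions for illustration, not a recommendation for every pipeline.

```python
import io
import logging

# An in-memory stream keeps the example self-contained; a real setup would
# more likely write to a file or a log management service.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setLevel(logging.WARNING)  # keep only WARNING and above
handler.setFormatter(logging.Formatter("%(levelname)s %(name)s %(message)s"))

logger = logging.getLogger("pipeline.ingest")  # hypothetical logger name
logger.setLevel(logging.DEBUG)   # the logger itself accepts everything
logger.addHandler(handler)
logger.propagate = False         # avoid duplicate output via the root logger

logger.info("loaded 1000 rows")            # dropped by the handler
logger.warning("3 rows had null ids")      # kept
logger.error("target table unreachable")   # kept

captured = stream.getvalue().splitlines()
print(captured)
```

Filtering at the handler rather than at the logger means you could attach a second handler later that keeps everything, for example during an investigation, without changing the pipeline code.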

Traces

The third data observability pillar that can help you monitor your data pipeline is traces. Trace data gets its name from the way it traces how applications operate. Trace data is great for evaluating the quality of data coming from specific applications.

The downside of using trace data is that it does not provide much context. Traces will only tell you about the application the data comes from; they will not tell you anything about the whole infrastructure. It’s important to use other tools in tandem with trace data to make sure you’re getting the complete picture.

Using tools like these to track the quality of your data can help you improve anomaly detection in your pipelines.

Data Pipeline Monitoring Metrics

When it comes to data pipeline monitoring, there are many important metrics to measure. However, not every situation requires you to measure the same metrics. The best types of data pipeline monitoring metrics to use depend on what kind of data observability you are trying to achieve.

Data observability can be used for different purposes depending on the scenario and the kind of organization. One example of a scenario in which you would need a high degree of data observability is when you’re monitoring a cloud-based data pipeline. For a cloud data pipeline, some of the most important data ingestion metrics to observe would include:

- Latency: the amount of time it takes to respond to a user request

- Traffic: the amount of demand

- Errors: the frequency with which your system fails

- Saturation: how close the system is to operating at full capacity

Carefully monitoring metrics like these would help ensure you don’t overlook a data pipeline performance issue in your cloud-based data pipeline.
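The four metrics listed above could be computed for one time window roughly as follows. This is a minimal sketch: the `RequestRecord` shape, the `summarize` function, and the capacity figure are all hypothetical, and a real pipeline would usually rely on a metrics library or platform rather than hand-rolled code.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestRecord:
    """One handled request (hypothetical shape, for illustration only)."""
    duration_ms: float  # time taken to respond
    succeeded: bool     # False if the request failed


def summarize(records, window_seconds, capacity_per_second):
    """Compute latency, traffic, error rate, and saturation for one window."""
    if not records:
        return {"latency_ms": 0.0, "traffic_rps": 0.0,
                "error_rate": 0.0, "saturation": 0.0}
    traffic = len(records) / window_seconds
    failures = sum(1 for r in records if not r.succeeded)
    return {
        "latency_ms": mean(r.duration_ms for r in records),  # average latency
        "traffic_rps": traffic,                               # requests per second
        "error_rate": failures / len(records),                # share of failures
        "saturation": traffic / capacity_per_second,          # fraction of capacity
    }


records = [
    RequestRecord(120.0, True),
    RequestRecord(80.0, True),
    RequestRecord(400.0, False),
    RequestRecord(200.0, True),
]
summary = summarize(records, window_seconds=2, capacity_per_second=10)
print(summary)
```

The sketch reports average latency for simplicity. Percentiles are often more informative in practice, because a few very slow requests can hide behind a healthy-looking average.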

Data Pipeline Monitoring Dashboard

Another great tool for improving your data observability is a data pipeline monitoring dashboard. A monitoring dashboard plays a very important role in a healthy data observability framework. Using a performance dashboard to aid with data pipeline observability can benefit your organization in a number of ways. Some of the ways you can use a data observability platform to monitor your data pipelines include:

- Predict incidents and prevent them before they happen

- Automate data reliability across all data warehouses

- Optimize data pipeline costs

- Align your organization’s data strategies with its larger business strategies

- Track the journey your data takes from beginning to end

- Automatically identify drift and anomalies in your data pipelines

- Increase the efficiency and reliability of your data pipelines

Different kinds of observability dashboards are available from data observability vendors. It’s important to select the best monitoring dashboard for your organization’s needs. Using all the tools at your disposal, like a monitoring dashboard, to help improve your organization’s data observability is one of the best ways to prevent a data pipeline performance issue before it happens.

Data Pipeline Audit

Anytime you need to determine how well a particular system is functioning, it’s a good idea to carry out an audit that can tell you more about the system’s performance in detail. A data pipeline audit can help you verify the quality and reliability of the data coming in through your data pipelines. Your data pipeline architecture needs to be maintained with the right tests and monitoring tools to ensure it’s still providing accurate, high-quality data.

Following data pipeline architecture best practices is one thing you can do to make sure your data pipeline functions smoothly. However, no matter how diligent you are about sticking to best practices, you’ll likely still need to perform an audit from time to time. A data pipeline architecture diagram could be helpful for this task.

Every data pipeline is vulnerable to anomalies. If you’re considering how to build a data pipeline, it’s important to take this fact into account. Carrying out periodic data pipeline audits and using a reliable data observability dashboard can help reduce the risk of errors in your data.
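As a rough illustration of what one automated audit step might look like, the sketch below counts two basic quality problems in a batch of records: missing required fields and duplicate IDs. The function `audit_rows`, the field names, and the sample rows are assumptions made up for this example. A real audit would cover many more checks.

```python
def audit_rows(rows, required_fields):
    """Count basic quality problems in a batch of dictionary records."""
    missing = 0
    duplicates = 0
    seen_ids = set()
    for row in rows:
        # A row is incomplete if any required field is absent or None.
        if any(row.get(field) is None for field in required_fields):
            missing += 1
        row_id = row.get("id")
        if row_id is not None:
            if row_id in seen_ids:
                duplicates += 1
            seen_ids.add(row_id)
    return {
        "total": len(rows),
        "missing_required": missing,
        "duplicate_ids": duplicates,
    }


# Hypothetical batch with one null amount, one repeated id, and one null id.
rows = [
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": None},
    {"id": 2, "amount": 5.0},
    {"id": None, "amount": 7.5},
]
report = audit_rows(rows, required_fields=["id", "amount"])
print(report)
```

Running a check like this on a schedule and recording the counts over time gives you a simple baseline to compare against during a full audit.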
